
Machine Learning solution for Predictive Vehicle Maintenance

In this article we explain our Machine Learning solution for predictive maintenance based on DTC (Diagnostic Trouble Code) codes, and describe the current status of the application on the EVOLVE platform.

I. SUMMARY

Koola.io created a Machine Learning model for Predictive Vehicle Maintenance that is based on two input parameters: DTC codes and the actions taken according to historical data (e.g., what was repaired when a specific DTC code was triggered). Six different models were created and deployed to the EVOLVE platform:

- Logistic Regression (LR)

- Linear Discriminant Analysis (LDA)

- K-Nearest Neighbors (KNN)

- Classification and Regression Trees (CART)

- Gaussian Naive Bayes (NB)

- Support Vector Machines (SVM)

Koola.io is currently testing two new models that use TensorFlow and are expected to produce better results. To get better predictions, it is important to test as many models as possible.

II. DATA OVERVIEW

Koola.io receives data on a regular (weekly) basis, and more than 4 million records are currently being processed. The data arrives as CSV files, which are processed and prepared for Machine Learning on a local Koola.io server. The resulting file contains 39 columns (38 columns of numerical parameters and 1 “call to action” text column) and is merged with the “big file” stored on the EVOLVE platform. Here is an example of the file (data):
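The preparation and merge step can be sketched with Python's standard library. This is a minimal illustration, not Koola.io's actual script. It assumes that the prepared weekly file has no header row, that the 38 numerical columns come first and the “call to action” text comes last, and that the “big file” is reachable as a local CSV path. The function name `append_batch` is hypothetical.

```python
import csv

EXPECTED_COLUMNS = 39  # 38 numerical parameters + 1 "call to action" text column

def append_batch(batch_path, big_file_path):
    """Validate a prepared weekly CSV batch and append it to the "big file".

    Hypothetical layout: no header row, numerical columns first,
    "call to action" text in the last column. Returns the rows appended.
    """
    validated = []
    with open(batch_path, newline="") as src:
        for row_no, record in enumerate(csv.reader(src), start=1):
            if len(record) != EXPECTED_COLUMNS:
                raise ValueError(
                    f"{batch_path}: row {row_no}: expected {EXPECTED_COLUMNS} "
                    f"columns, got {len(record)}"
                )
            for value in record[:-1]:
                float(value)  # raises ValueError on a non-numeric parameter
            validated.append(record)
    # Write only after the whole batch passed validation, so a bad file
    # never leaves a partially appended big file behind.
    with open(big_file_path, "a", newline="") as dst:
        csv.writer(dst).writerows(validated)
    return len(validated)
```

Validating the whole batch before writing anything keeps the “big file” consistent even when a weekly delivery is malformed.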

III. MACHINE LEARNING MODEL

The basic algorithm of the Machine Learning model is:

- Prepare data

- Separate out a validation dataset

- Set up the test harness to use 10-fold cross validation

- Build multiple different models to make predictions

- Test the models

- Select the best one

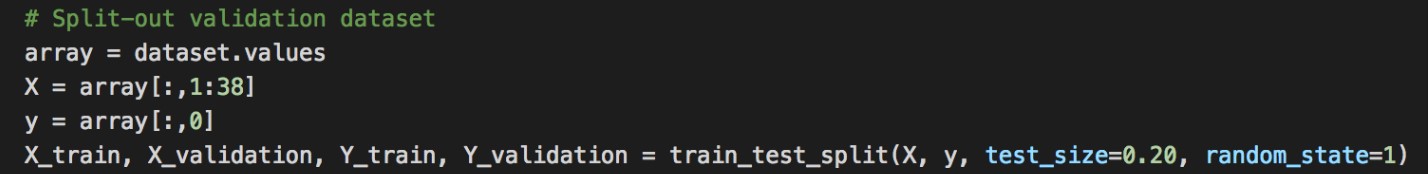

Validation dataset. To check how good the model is, we need a good validation dataset. This means holding back some data that the algorithms never see during training, and using that data to get a second, independent estimate of how accurate the best model actually is. We split the loaded dataset in two: 80% is used to train, evaluate and select among our models, and the remaining 20% is held back as the validation dataset.

We now have training data in X_train and Y_train for preparing models, and X_validation and Y_validation sets that we can use later.
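The 80/20 split can be expressed with scikit-learn's `train_test_split`. This is a sketch built on assumptions. The random data and the three label strings are hypothetical stand-ins for the 38 numerical columns and the “call to action” column, and `random_state=1` is an arbitrary fixed seed.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the prepared dataset: 38 numerical columns (X)
# and a "call to action" label (Y). The real data comes from the big file.
rng = np.random.default_rng(seed=1)
X = rng.normal(size=(1000, 38))
Y = rng.choice(["replace battery", "replace sensor", "no action"], size=1000)

# Hold back 20% of the rows as the validation dataset; the fixed seed
# makes the split reproducible.
X_train, X_validation, Y_train, Y_validation = train_test_split(
    X, Y, test_size=0.20, random_state=1
)
print(X_train.shape, X_validation.shape)  # → (800, 38) (200, 38)
```

You could also pass `stratify=Y` to `train_test_split` to keep the class proportions equal in both parts. The article does not say whether this is done.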

Later, we will use statistical methods to estimate the accuracy of the models that we create on unseen data. We also want a more concrete estimate of the accuracy of the best model on unseen data by evaluating it on actual unseen data.

Test Harness. We use stratified 10-fold cross validation to estimate model accuracy. This splits the training dataset into 10 parts, trains on 9 and tests on the remaining 1, and repeats the process until every part has served as the test set once. Stratified means that each fold aims to have the same distribution of examples by class as exists in the whole training dataset. We set the random seed via the random_state argument to a fixed number so that every algorithm is evaluated on the same splits of the training dataset. We evaluate the models with the “accuracy” metric: the number of correctly predicted instances divided by the total number of instances in the dataset, multiplied by 100 to give a percentage (e.g., 95% accurate). We use the scoring variable when we build and evaluate each model next.
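Below is a short sketch of the test harness using scikit-learn's `StratifiedKFold`, with the accuracy formula written out by hand. The labels are hypothetical and deliberately imbalanced (80% / 20%) to show that every test fold keeps the same class ratio.

```python
from collections import Counter

import numpy as np
from sklearn.model_selection import StratifiedKFold

def accuracy_percent(y_true, y_pred):
    """Accuracy = correctly predicted instances / all instances * 100."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * correct / len(y_true)

# Hypothetical, imbalanced labels: 80 "no action" rows, 20 "replace sensor" rows.
y = np.array(["no action"] * 80 + ["replace sensor"] * 20)
X = np.zeros((len(y), 38))  # feature values do not influence the stratified split

# A fixed random_state means every algorithm is evaluated on the same 10 splits.
kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)
for fold, (train_idx, test_idx) in enumerate(kfold.split(X, y), start=1):
    # Each test fold keeps the 80/20 ratio: 8 "no action" and 2 "replace sensor".
    print(fold, len(train_idx), dict(Counter(y[test_idx])))

print(accuracy_percent(["a", "b", "a", "a"], ["a", "b", "b", "a"]))  # → 75.0
```

Note that scikit-learn's `scoring="accuracy"` returns a fraction between 0 and 1, not a percentage. Also, `random_state` only has an effect when `shuffle=True`; recent scikit-learn versions raise an error if it is set without shuffling.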

Build Models. We don’t know in advance which algorithms will perform well on this problem or which configurations to use. The plots suggest that some of the classes are partially linearly separable in some dimensions, so we expect generally good results. Here is an example of how we get the result for the SVM model:
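The following sketch builds the six models from the summary and scores each one, including the SVM, with stratified 10-fold cross validation. It is an illustration, not the production code. It uses scikit-learn with default hyperparameters (the real configurations are not described), and `make_classification` generates hypothetical data in place of the real dataset.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

def build_models():
    """The six algorithms from the summary, with scikit-learn defaults (assumption)."""
    return {
        "LR": LogisticRegression(max_iter=1000),
        "LDA": LinearDiscriminantAnalysis(),
        "KNN": KNeighborsClassifier(),
        "CART": DecisionTreeClassifier(random_state=1),
        "NB": GaussianNB(),
        "SVM": SVC(),
    }

def evaluate(models, X_train, Y_train, seed=1):
    """Return {name: per-fold accuracies} from stratified 10-fold cross validation."""
    # One fixed seed, so every model sees exactly the same 10 splits.
    kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    results = {}
    for name, model in models.items():
        scores = cross_val_score(model, X_train, Y_train, cv=kfold, scoring="accuracy")
        results[name] = scores
        print(f"{name}: mean={scores.mean():.3f} std={scores.std():.3f}")
    return results

# Hypothetical stand-in data: 400 rows, 38 numerical features, 3 action classes.
X_train, Y_train = make_classification(
    n_samples=400, n_features=38, n_informative=10, n_redundant=0,
    n_classes=3, random_state=1,
)
results = evaluate(build_models(), X_train, Y_train)
```

To score only the SVM, call `evaluate({"SVM": SVC()}, X_train, Y_train)`. Because the same `kfold` object is reused, all models are compared on identical folds.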

Select Best Model. We now have six models and an accuracy estimate for each. We compare the models to each other and select the most accurate one. It is very important to test the different models against datasets of different sizes.
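Picking the winner from the cross-validation results is a small step, and one possible rule is sketched below. The tie-break on standard deviation is our assumption, since the article only says the most accurate model is selected. The fold scores in the usage line are made up for illustration.

```python
from statistics import mean, stdev

def select_best(cv_results):
    """Return (name, mean accuracy, std) of the most accurate model.

    cv_results maps each model name to its per-fold accuracy scores
    (at least two folds, because stdev needs two values). If two models
    tie on mean accuracy, the one with the lower standard deviation wins.
    """
    summary = {name: (mean(scores), stdev(scores)) for name, scores in cv_results.items()}
    best = max(summary, key=lambda name: (summary[name][0], -summary[name][1]))
    return (best, *summary[best])

# Made-up fold scores, for illustration only.
print(select_best({"LR": [0.75, 0.75], "KNN": [0.5, 1.0], "SVM": [0.875, 0.875]}))
# → ('SVM', 0.875, 0.0)
```

Once a model is selected, it is fitted on X_train and Y_train and scored once on X_validation and Y_validation. This gives the independent accuracy estimate described above.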

IV. MODEL DEPLOY

As mentioned, our model is deployed to the EVOLVE platform. A few things need to be explained here:

- Dataset sync

- Docker image

- Argo Workflow

Dataset sync. We created an Argo Workflow that syncs the input training dataset on EVOLVE with new data arriving on the Koola.io server. The crucial command used in this workflow is rsync, and all it needs to establish the connection is a set of SSH keys.
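Here is a minimal sketch of the sync step, driving rsync from Python. The host name, paths and key location are hypothetical. In the real setup the command runs inside an Argo Workflow step rather than a local script.

```python
import shlex
import subprocess

def build_rsync_command(source, destination, ssh_key):
    """Build an rsync command that copies only new or changed files over SSH.

    -a  archive mode: recurse and preserve timestamps/permissions
    -z  compress data during transfer
    -e  remote shell to use; here ssh authenticated with the given key
    """
    return ["rsync", "-az", "-e", f"ssh -i {shlex.quote(ssh_key)}", source, destination]

def sync_dataset(source, destination, ssh_key):
    # check=True raises CalledProcessError if rsync exits with a non-zero status.
    subprocess.run(build_rsync_command(source, destination, ssh_key), check=True)

# Hypothetical locations: pull prepared files from the Koola.io server
# into the dataset directory on EVOLVE.
cmd = build_rsync_command(
    "koola@data.koola.example:/data/prepared/",
    "/mnt/evolve/dataset/",
    "/secrets/ssh/id_ed25519",
)
print(shlex.join(cmd))
```

The trailing slash on the source path tells rsync to copy the directory's contents rather than the directory itself.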

Docker image. Our code is written in Python and uses several Python libraries for the Machine Learning model. Once the code is ready to be deployed, we create a Docker image, and every change in the code gets a new image tag. The image is then pushed to the EVOLVE platform via a private registry.
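One way to give every code change its own tag is to tag each image with the Git commit it was built from. The sketch below assumes that convention. The registry host and repository name are hypothetical, and the code shells out to the standard `git` and `docker` command-line tools.

```python
import subprocess

REGISTRY = "registry.evolve.example"         # hypothetical private registry host
REPOSITORY = "koola/predictive-maintenance"  # hypothetical image repository

def current_commit():
    """Short hash of the checked-out Git commit."""
    out = subprocess.run(["git", "rev-parse", "--short", "HEAD"],
                         check=True, capture_output=True, text=True)
    return out.stdout.strip()

def image_ref(commit, registry=REGISTRY, repository=REPOSITORY):
    """Full image reference; the tag changes whenever the code changes."""
    return f"{registry}/{repository}:{commit}"

def build_and_push(context="."):
    """Build the image from the given directory and push it to the registry."""
    ref = image_ref(current_commit())
    subprocess.run(["docker", "build", "-t", ref, context], check=True)
    subprocess.run(["docker", "push", ref], check=True)
    return ref
```

Pushing to a private registry requires a prior `docker login` against that registry.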

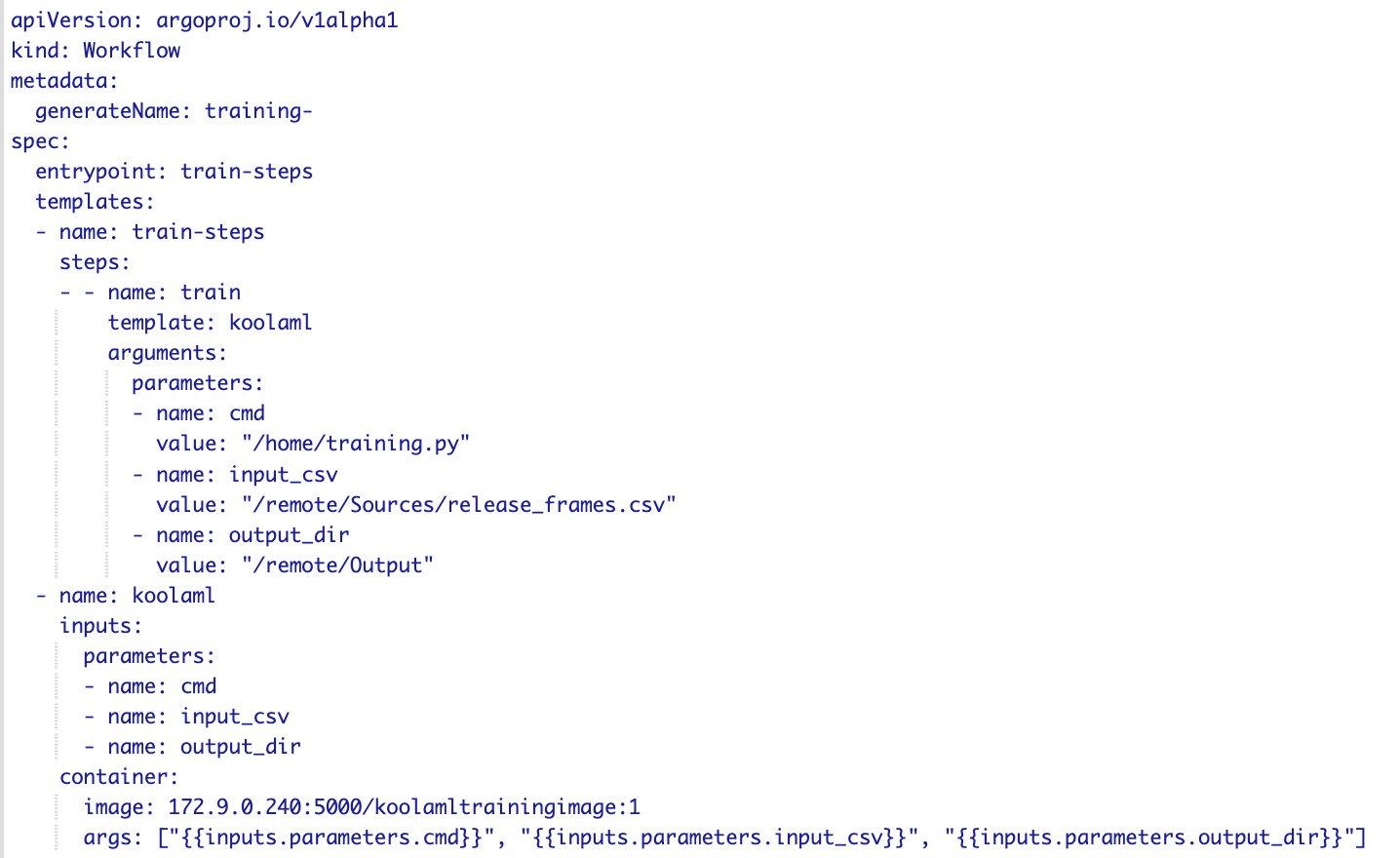

Argo Workflow. Once the input dataset and the Docker image are on the EVOLVE platform, we are ready to run the training process, for which we use Argo. The flow is not complicated: we only need to call the Docker image with specific parameters and run the different models in parallel. Here is an example of the Workflow code:
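The workflow could look roughly like the manifest below, generated here as a Python dictionary and printed as JSON. This is not Koola.io's workflow. The image reference, the `train.py` entry point, its `--model` and `--data` flags, and the dataset path are all hypothetical, and volume mounts for the dataset are omitted. The manifest uses standard Argo fields: steps in the same inner list run in parallel, and `withItems` expands one step into one step per model name.

```python
import json

# Hypothetical image reference in the private registry.
IMAGE = "registry.evolve.example/koola/predictive-maintenance:0123456"
MODELS = ["LR", "LDA", "KNN", "CART", "NB", "SVM"]

def build_workflow(image=IMAGE, models=MODELS, dataset="/data/big_file.csv"):
    """Argo Workflow manifest that trains each model in its own parallel pod."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "predictive-maintenance-"},
        "spec": {
            "entrypoint": "train-all",
            "templates": [
                {
                    # Steps inside one inner list run in parallel; withItems
                    # expands this single step into one step per model name.
                    "name": "train-all",
                    "steps": [[{
                        "name": "train",
                        "template": "train-model",
                        "arguments": {"parameters": [{"name": "model", "value": "{{item}}"}]},
                        "withItems": models,
                    }]],
                },
                {
                    "name": "train-model",
                    "inputs": {"parameters": [{"name": "model"}]},
                    "container": {
                        "image": image,
                        # Hypothetical entry point and flags of the training script.
                        "command": ["python", "train.py"],
                        "args": ["--model", "{{inputs.parameters.model}}", "--data", dataset],
                    },
                },
            ],
        },
    }

print(json.dumps(build_workflow(), indent=2))
```

JSON is valid YAML, so the printed output can be saved to a file and submitted with `argo submit`.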

V. NEXT STEPS AND CONCLUSION

We are currently taking advantage of the TensorFlow library. We have created a new model that is being tested, and a new Docker image for it is about to be created. We are also exploring GPU utilization on the EVOLVE platform, which will be useful with TensorFlow. Once the new method is successfully deployed, we plan to measure different KPIs and compare them with the existing ones, and to write a script that visualizes the differences between the methods.

The output of our training algorithm is a pickle file. We plan to expose it via an API that will be used by a simple UI app. We also plan to introduce more output parameters, such as the cost of the repair, the time needed, and others.
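Here is a sketch of how the pickle file could back a prediction API. The request and response format and the `handle_predict` name are hypothetical. The model can be any object with a scikit-learn style `predict` method, and the HTTP layer (a web framework calling the handler) is left out.

```python
import json
import pickle

def save_model(model, path):
    """Serialize the trained model to a pickle file."""
    with open(path, "wb") as f:
        pickle.dump(model, f)

def load_model(path):
    """Load a pickled model. Only unpickle trusted files: pickle can run arbitrary code."""
    with open(path, "rb") as f:
        return pickle.load(f)

def handle_predict(model, request_body):
    """Hypothetical API handler.

    Request:  {"features": [[38 numbers], ...]}
    Response: {"actions": ["<call to action>", ...]}
    """
    payload = json.loads(request_body)
    predictions = model.predict(payload["features"])
    # str() turns NumPy strings or integers into plain JSON-serializable values.
    return json.dumps({"actions": [str(p) for p in predictions]})
```

Because the model is loaded once and passed to the handler, the API does not re-read the pickle file on every request.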

Our final conclusion is that this is a computational problem rather than a big data problem. As such, it was impossible to train in a local environment on datasets larger than 1 million records. On the EVOLVE platform that problem is simply gone: we run 6 different models in parallel on more than 4 million records.